Modularity is a cornerstone of good application design. As

systems become more distributed, we’re faced with unique challenges to

achieving effective modularity. How do you organize, encapsulate, and version

loosely-coupled services?

In this series of posts, I will cover how modular

architectures were built for two diverse Java-based applications: a

highly reliable SOA tax processing platform that interfaces with legacy systems;

and a low-latency, event-based system for FX currency trading. Modularity was achieved using OSGi,

Service Component Architecture (SCA), and Fabric3 as the

runtime stack.

This post will start with a brief overview of the technologies

involved in creating these modular systems and proceed to a detailed discussion

of how they were used to build the SOA tax processing platform for a European

government. In a subsequent post, we will cover how the same modularity

techniques were applied to successfully deliver the low-latency FX trading

architecture to a major bank.

From OSGi to Service Composition

There is no one technology that offers a complete modularity

solution. That’s because modularity

provides a number of features and exists at a number of levels in an

application.

In terms of features, modularity:

- Reduces complexity by segmenting code into discrete units

- Provides a mechanism for change by allowing application components to be versioned

- Promotes reuse by defining contracts between subsystems

Modularity is also present at different levels of an

application:

While much of the above diagram will be familiar to Java

developers, it’s worth defining what we mean by service composition and

architectural modularity. Service-Orientation (organizing application logic

into contract-based units) and Dependency Injection (popularized by frameworks

such as Spring and Guice) are the foundation of modern architectural

modularity. In a nutshell, both help to decouple subsystems, thereby making an

application more modular.
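
To make the decoupling concrete, here is a minimal sketch of a contract-based service with constructor injection. Every name in it (`TaxRecordSource`, `InMemoryTaxRecordSource`, `RequestHandler`) is hypothetical and invented for illustration; none comes from the systems discussed in this post. The wiring is done by hand, where a container would normally do it.

```java
// All names here are hypothetical and exist only for illustration.

/** The service contract: clients depend on this interface alone. */
interface TaxRecordSource {
    String fetch(String taxpayerId);
}

/** One implementation of the contract; it can be swapped without touching clients. */
class InMemoryTaxRecordSource implements TaxRecordSource {
    @Override
    public String fetch(String taxpayerId) {
        return "record:" + taxpayerId;
    }
}

/** A client that receives its dependency through the constructor instead of creating it. */
class RequestHandler {
    private final TaxRecordSource source;

    RequestHandler(TaxRecordSource source) {
        this.source = source;
    }

    String handle(String taxpayerId) {
        return "handled " + source.fetch(taxpayerId);
    }
}

class InjectionDemo {
    public static void main(String[] args) {
        // Wired by hand here; a container such as Spring or Guice would normally do this.
        RequestHandler handler = new RequestHandler(new InMemoryTaxRecordSource());
        System.out.println(handler.handle("42"));
    }
}
```

Because `RequestHandler` only sees the interface, the implementation behind it can be replaced or versioned independently.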

As with object orientation, what’s needed is a way to group

collections of services together for better management and a mechanism to encapsulate

the implementation details of particular services:

Fabric3 provides such a composition mechanism, one that works well

for both SOA and event-driven designs.

I’ll now turn to how this was achieved in a tax-processing system and an FX

trading platform.

I’ve deliberately chosen these two examples because each

application has a different set of requirements. The tax system is what many

would label a SOA integration platform: it receives asynchronous requests for

tax data, interfaces with a number of legacy systems, process the results, and

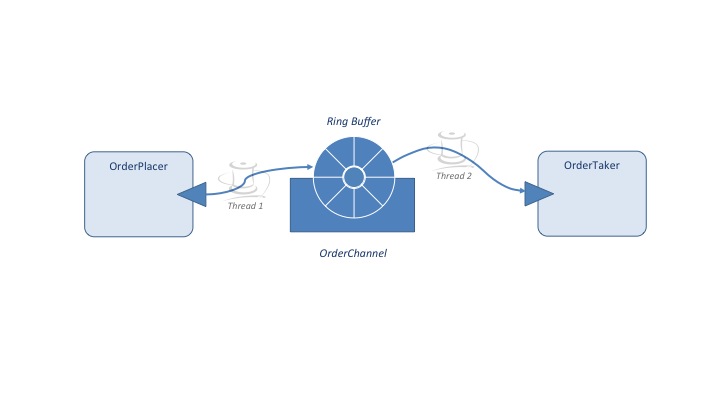

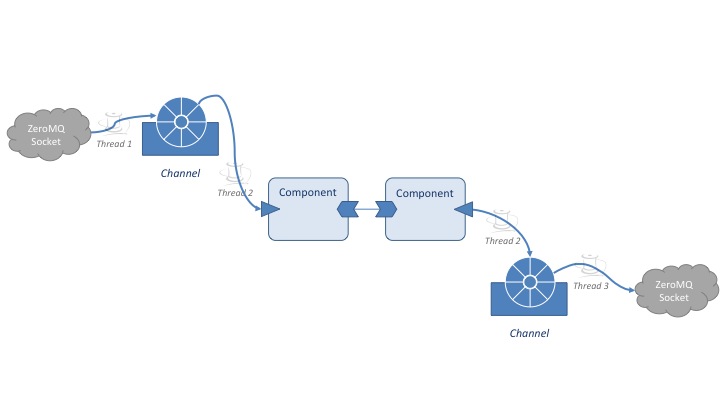

sends a response to the requesting party. The FX system, in contrast, is

concerned with extreme (microsecond) latencies: it receives streams of market

data, processes them, and in turn provides derived foreign exchange pricing

feeds to client systems.

SOA Modularity

The tax system architecture looks like this:

Tax System Architecture

Tax information requests arrive over a custom reliable messaging layer at a gateway service, which transactionally persists the message request and initiates processing. Processing takes place in a number of steps using a series of complex rules and interactions with multiple legacy systems. When data has been received and processed, a response is sent via the messaging layer to the requestor.

The principal modularity challenge faced when designing the

system was to separate the core processing (a state machine that transitions a

request through various stages) from the rules evaluation and logic that connects

to the legacy systems.
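
As a rough illustration of that separation, the sketch below keeps a small state machine in the core and reaches the rules and the legacy systems only through interfaces. The stage names and every type here are assumptions made for the example; the post does not describe the real system's states or APIs.

```java
// Hypothetical stages and interfaces; the real system's states are not described in this post.

enum Stage { RECEIVED, DATA_RETRIEVED, EVALUATED, RESPONDED, REJECTED }

/** Contract implemented by the integration subsystem. */
interface LegacyGateway {
    String fetchData(String requestId);
}

/** Contract implemented by the rules subsystem. */
interface RulesEvaluator {
    boolean accept(String data);
}

class TaxRequest {
    final String id;
    Stage stage = Stage.RECEIVED;
    String data;

    TaxRequest(String id) {
        this.id = id;
    }
}

/** Core processing: owns the transitions, knows nothing about rule or legacy details. */
class RequestProcessor {
    private final LegacyGateway gateway;
    private final RulesEvaluator rules;

    RequestProcessor(LegacyGateway gateway, RulesEvaluator rules) {
        this.gateway = gateway;
        this.rules = rules;
    }

    /** Moves the request forward by exactly one stage. */
    void step(TaxRequest request) {
        switch (request.stage) {
            case RECEIVED:
                request.data = gateway.fetchData(request.id);
                request.stage = Stage.DATA_RETRIEVED;
                break;
            case DATA_RETRIEVED:
                request.stage = rules.accept(request.data) ? Stage.EVALUATED : Stage.REJECTED;
                break;
            case EVALUATED:
                request.stage = Stage.RESPONDED;
                break;
            default:
                break; // RESPONDED and REJECTED are terminal
        }
    }

    /** Runs the request until it reaches a terminal stage. */
    Stage process(TaxRequest request) {
        while (request.stage != Stage.RESPONDED && request.stage != Stage.REJECTED) {
            step(request);
        }
        return request.stage;
    }
}
```

With this shape, either collaborator can be replaced without editing `RequestProcessor`, which is the property the design was after.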

A key goal of modularizing the various subsystems was to

provide a straightforward versioning mechanism. For example, tax rules typically

change every tax year. Consequently, existing rules had to be preserved (to

handle requests for data involving previous tax years) alongside the current

year rules. Modularizing the rules allowed for them to be updated without

affecting other parts of the system.
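
One way to picture rules for several tax years living side by side is a lookup keyed by year. This is a simplified sketch and not how the production system selected rules; `TaxRules`, `RulesRegistry`, the years, and the rates used in the usage note are all made up.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical contract for one tax year's rules; amounts are in cents. */
interface TaxRules {
    long taxDue(long incomeInCents);
}

/** Keeps one rules implementation per tax year so older years stay available. */
class RulesRegistry {
    private final Map<Integer, TaxRules> rulesByYear = new HashMap<>();

    void register(int taxYear, TaxRules rules) {
        rulesByYear.put(taxYear, rules);
    }

    TaxRules forYear(int taxYear) {
        TaxRules rules = rulesByYear.get(taxYear);
        if (rules == null) {
            throw new IllegalArgumentException("No rules registered for tax year " + taxYear);
        }
        return rules;
    }
}
```

Registering `income -> income / 5` for one year and `income -> income * 22 / 100` for the next lets a request for the earlier year still be evaluated under the earlier rules, while new requests pick up the current ones.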

The parts of the application that interfaced with the legacy

systems to retrieve tax data were also isolated in a module. As with the

rules, this allows the code that calls the external interfaces to be

altered without impacting the rest of the system. Modularity served an

additional practical purpose: the code to interface with the legacy systems was

complex and tedious. By segmenting that complexity, the overall system was made

easier to understand and maintain.

What did this modularity translate to in practice? The development

environment was set up as a Maven multi-module build. The base API modules

contain Java interfaces for various services. Individual modules for core

processing, rules, and integration depend on the relevant API modules:

The multi-module build enforces development-time modularity.

For example, the rules module cannot reference classes in the integration

module. OSGi is used for runtime code modularity. The API modules export

packages containing the service interfaces while each dependent module imports

the API interfaces it requires.

The tax system uses service composition to enforce

modularity at the service level. The

core processing, rules, and integration subsystems are all composed of multiple

fine-grained services. The integration subsystem in particular exposes a single

interface for receiving requests from the core processing module. This request

is then passed through a series of services that invoke legacy systems using

Web Services (WS-*):

Tax System Integration Module

Service composition is handled in Fabric3 by using SCA composites.

Similar to a Spring application context, a composite specifies a set of

components and their wiring. In this example, we use XML to define the

composite (the next version of Fabric3 will also support a Java-based DSL):

The Integration Module Composite

As its name implies, a Composite provides a way to compose

coarser-grained services from private, finer-grained ones. In the above

example, the service element promotes, or exposes, the TaxSystem

service as the public interface of the composite.

Service Promotion

When this is done, client services in the core processing

module can reference the TaxSystem integration composite as a single service:
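
The effect of promotion can be approximated in plain Java, without any Fabric3 or SCA API. In the sketch below only the `TaxSystem` name comes from the text above; the method signature, the three internal services, and the string handling are assumptions chosen to keep the example short. A real composite would declare the components and their wiring rather than building them in code.

```java
/** The promoted contract: the only type clients of the integration module see. */
interface TaxSystem {
    String retrieve(String taxpayerId);
}

/** Plain-Java stand-in for a composite: private services behind one public interface. */
final class IntegrationComposite {
    private IntegrationComposite() {
    }

    /** Wires the private services together and exposes them as a single TaxSystem. */
    static TaxSystem create() {
        RequestTranslator translator = new RequestTranslator();
        LegacyInvoker invoker = new LegacyInvoker();
        ResponseNormalizer normalizer = new ResponseNormalizer();
        return taxpayerId ->
                normalizer.normalize(invoker.invoke(translator.translate(taxpayerId)));
    }

    // Hypothetical fine-grained services; invisible outside the composite.
    private static final class RequestTranslator {
        String translate(String taxpayerId) {
            return "REQ:" + taxpayerId;
        }
    }

    private static final class LegacyInvoker {
        String invoke(String request) {
            return request + ":RAW"; // stands in for a call to a legacy system
        }
    }

    private static final class ResponseNormalizer {
        String normalize(String raw) {
            return "OK(" + raw + ")";
        }
    }
}

/** A client in the core processing module: it references the composite as one service. */
class CoreProcessing {
    private final TaxSystem taxSystem;

    CoreProcessing(TaxSystem taxSystem) {
        this.taxSystem = taxSystem;
    }

    String process(String taxpayerId) {
        return taxSystem.retrieve(taxpayerId);
    }
}
```

The client never learns how many services sit behind `TaxSystem`, so the chain inside the composite can be rearranged or rewritten freely.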

With composites in place, the tax system successfully delivered a consistent modular design from the code layer to its service architecture:

Service Architecture Modularity

After more than a year in production, the investment in this

modular design paid off. The integration module was re-written to take

advantage of new, significantly different legacy system interfaces without the

need to refactor the other subsystems.

In the next post, we will cover how service composition was

used to modularize a low-latency, event-based FX trading platform. In this

case, service composition was employed to simplify the system architecture and

provide a mechanism for writing custom plugins while maintaining

sub-millisecond performance.